Rana el Kaliouby: why technology needs emotional intelligence

Contagious speaks to Dr Rana el Kaliouby, computer scientist and CEO of emotion measurement technology company Affectiva, about why technology must be more emotionally intelligent

Phoebe O’Connell

Dr Rana el Kaliouby is on a mission to humanise our interactions with technology. A pioneer in the field of ‘emotional artificial intelligence’ – or ‘emotion AI’ – the former MIT research scientist founded Affectiva (an MIT Media Lab startup) in 2009 to explore how machine learning, combined with massive amounts of data, could identify and respond to human emotions.

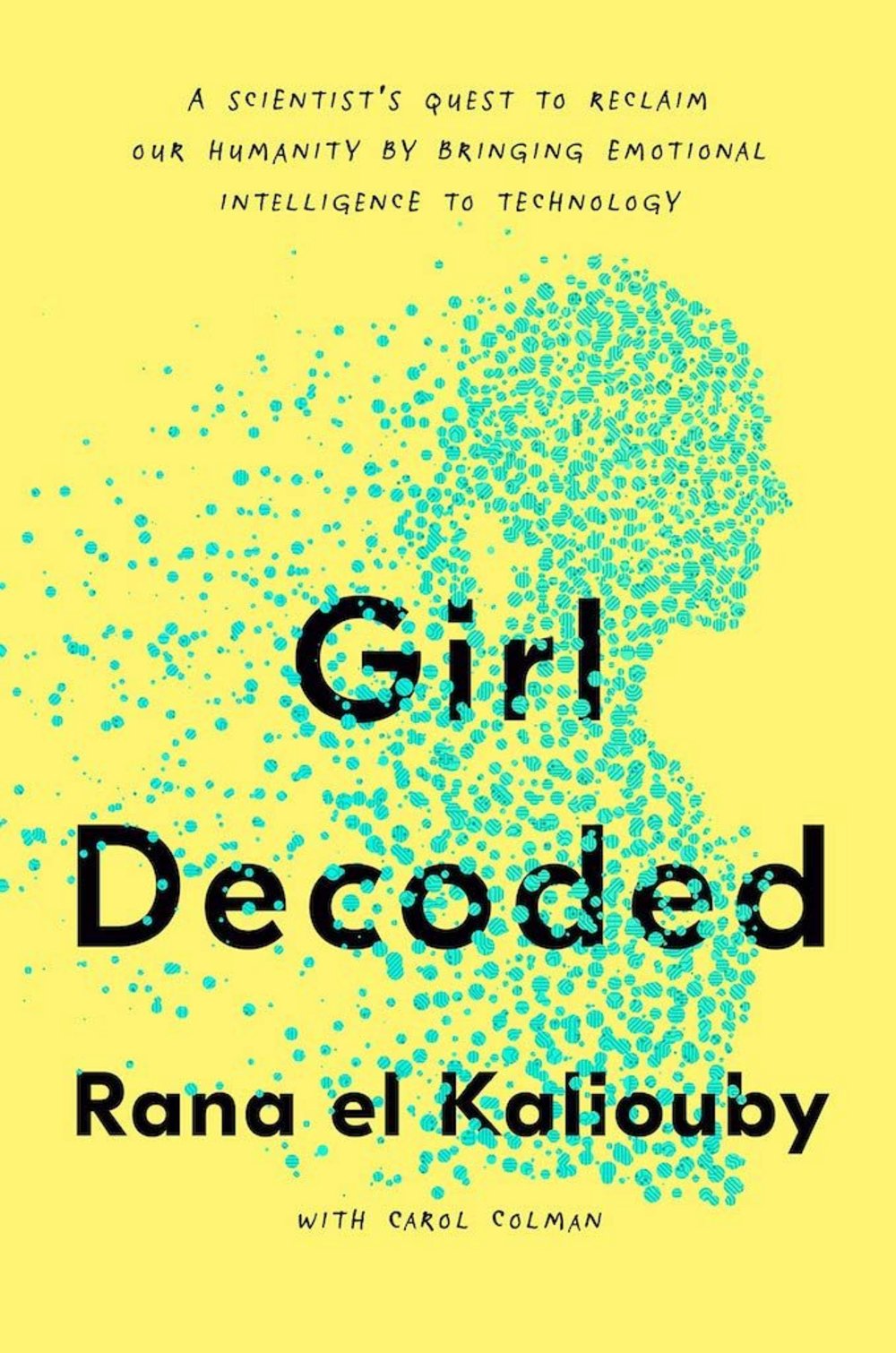

In her new book, Girl Decoded: A Scientist's Quest to Reclaim Our Humanity by Bringing Emotional Intelligence to Technology, which is part memoir, part entrepreneur’s manual, el Kaliouby recounts what inspired her interest in emotion AI and looks to a future in which people’s interactions with technology will be more human.

Contagious spoke to el Kaliouby to find out more about how emotion AI could be used to transform everything from automotive safety to mental health programmes, why it’s useful for marketing, and how it could help connect people during times of social distancing.

How do you define emotion AI and why do we need it?

If you think about what makes a person intelligent, it's not just your IQ or your cognitive intelligence, it's your emotional intelligence as well. It's how tuned in to other people you are. Can you understand people's nonverbal cues? Can you respond to those and adapt in real time? That's what makes people more emotionally intelligent. And we know from years of research that people who have higher EQs [emotional quotients or emotional intelligence] tend to be more persuasive, they're more likeable, they're more effective managers, they're more effective partners, you name it. I believe that that is true for technology as well, especially devices that need to interact with people on a day-to-day basis. And so my core belief is that technology needs EQ. And the way we do that is we build algorithms that can understand people's nonverbal communications, like your facial expressions, your gestures, your vocal intonation, through activity, and allow the device to take in all of that data and adapt in real time.

Do you have an ultimate vision for this technology?

We will interact with technology, just the way we interact with one another, through perception and conversation and empathy. That's the ultimate goal here: that there will be a vision of the universe where technology just interacts with us the same way we interact with one another. But more practically, I am very passionate about the application of this technology to mental health. Today, the way we measure mental health is based on subjective self-reports. And I believe there's an opportunity to bring in your facial and vocal biomarkers as indicators of depression, even suicidal intent or anxiety.

Of course, in the times we’re living in right now, there’s this sense of loneliness and isolation – even though we’re all connected on our devices, it’s an illusion of a connection. And it’s because we’re missing all of this rich non-verbal communication that we usually apply when we're together in a face-to-face context. So I do believe there’s an opportunity with emotion AI to bring this kind of deeper level of connection when we are online.

How have you seen attitudes towards AI, and particularly emotion AI, change since you first started working in the field?

I've been doing this for 20 years now, first as an academic and then, over the past 10 years, running Affectiva. When we first started, people really did not understand the role of emotions in our lives, forget about the role of emotions in technology. I remember when Rosalind Picard, my co-founder, and I were pitching the company to investors, we would avoid the word 'emotion' at all costs; we used to call it the ‘e word’. We even called the company Affectiva because 'affect' is a synonym for emotion, but it's less feminine. I think all that has changed over the past six or so years.

Especially when it comes to brands and media and content, there is recognition that emotions drive consumer behaviour: they drive word of mouth, they drive purchase intent, they drive actual sales and marketing decisions and purchase behaviours, and they drive our memory (they inform what we do and don't remember). There's been this shift towards trying to understand and quantify the subconscious emotional responses of audiences, users or consumers to products or services, to content and to advertising, and to use that to inform decision-making.

One of your first commercial partnerships was with communications company WPP. Why is your technology a valuable tool for marketers?

WPP first got in touch with us in 2011. We had done a mini experiment on Forbes' website where we crowdsourced people's responses to a couple of Super Bowl ads. We told people, 'Turn your webcam on. We're going to measure your responses. You can look at how you responded versus everybody else in the world who also watched the same ad.' It was the first instance of this idea of collecting people's responses over the internet and it caught the attention of WPP, who are, of course, in the business of trying to quantify the emotional engagement viewers have with content.

WPP ended up being a strategic investor and continues to be our largest customer and partner. Now, through them and other similar research agencies, we work with a third of the Fortune Global 500 companies and we're deployed in 90 countries around the world. We test about 30 to 50 ads a day now, and it's all automated. We've tested about 50,000 video ads to date and we've been able to capture over nine and a half million responses. So it's a lot of data, and it's fascinating to see the trends, like how ads are becoming more tear-jerking and emotional.

When Contagious spoke to Affectiva CMO Gabi Zijderveld a few years ago, she explained that Affectiva's emotion recognition technology would allow advertisers to personalise the way that automated content is served. How do you see this personalisation working?

Imagine the two of us are watching a Netflix show, but we have different interests. I like the romantic scenes; maybe you like the action scenes. And you can imagine how, based on our emotional responses, it becomes almost a 'choose your own adventure' kind of game. It takes a different path depending on what you resonate with the most. There are also opportunities for content recommendation: if I'm on Netflix and it's clear from my emotional engagement metrics that I like a particular type of movie, then you could recommend similar content without me having to answer countless surveys. You could just clue into what I am most emotionally engaged with.

In the book, you describe how, when you were first pitching Affectiva to investors, they were often put off by your long list of potential use cases. What would you say the focus is today compared with when you started out?

The list of use cases continues to grow. We routinely get approached by all sorts of people who want to use this in all sorts of ways. About five years ago, we entertained all of them, but we quickly found that that was a recipe for disaster. We had a version of our technology you could license. We called it 'emotion-enable your app or your device'. As a company, you do have to focus and solve a specific problem for somebody. So we're now very focused on the media analytics space, which is where we started. And we're continuing to see a lot of growth in that application: everything around audience response, understanding brand loyalty and brand perception. Automotive is the other area that we're very focused on. [The company has developed technology that measures facial and vocal expressions to detect potentially dangerous driver states, such as tiredness or distraction, with the aim of preventing road accidents.]

Photo by Lianhao Qu on Unsplash

What are you working on at the moment that you're excited about?

Especially given the times we're in, as we're all working from home and as we all crave this feeling of human connection, I feel like there's an opportunity to bring our technology into livestreamed virtual events like a virtual conference or webinar. I've been giving a lot of webinars and it's really unsettling, because you're presenting to hundreds of people but you can't see them and you have no idea how engaged they are in the content. Imagine if you could capture all of the audience's responses in real time, share that with everybody and create the sense of a shared experience. I'm excited about that idea, and I'm trying to move it forward and find the right partners for it.

Throughout Girl Decoded, you talk about the importance of data privacy. How did you know, when Affectiva was just beginning, that privacy concerns and trust breaches would become one of the biggest issues facing tech companies today?

We recognised very early on, when we were starting the company 10 years ago, that this type of data (understanding people's emotional and cognitive responses to the things around them) is very personal data, sometimes as personal as it gets. To build the trust that encourages people to share this type of data, we had to make data privacy and consent one of our core values.

What do you think businesses should and could be doing to ensure consumer trust?

There are a few things. First of all, there's this concept of really implementing consent and opt-in, and doing that with transparency. In a lot of the systems where you have to opt in now, the language is legalese; it's pages and pages of terms and conditions. As a consumer, you don't quite understand what exactly is being collected, how the data is being used or who has access to it; it's really opaque. I feel like tech companies need to be forthcoming, transparent and honest about how the data is being used. When we ask for people’s consent, for example, to turn their cameras on to share their emotional reactions, of course we have the legalese, but we then simplify it into a three-sentence consent like, 'Turn the camera on, now we're going to do this, and here's who is going to have access to your data.'

The other thing is this idea of power asymmetry. Tech companies and organisations own all of this data, and they are able to leverage it in all sorts of ways. What about the consumer who's sharing this data? What's in it for me? What value do I get in return? I think we need to rebalance that power asymmetry a little bit.

In Girl Decoded you talk about a time when you were strapped for funding but turned down a lucrative offer from an intelligence agency. What have you had to sacrifice for your values around trust and privacy?

When we started the company, there were so many applications of this technology, and we wanted to make sure that, as a business, we lived by our core values. That meant there were some industries we would turn down, like detection, surveillance and security, even though they're probably lucrative markets.

We now organise an annual summit [Emotion AI Summit] to build an ecosystem around this [emotion AI] technology. We advocate for the cases where it could be a lot more beneficial, like mental health or automotive, and try to steer people away from focusing on these other use cases.